AI-based crawlers such as GPTBot and ClaudeBot are designed to interpret content with deeper context and semantic understanding. As zero-click search results, voice queries, and AI-generated answers gain popularity, ensuring your site is properly crawled and indexed has become more important than ever. This blog post explores the importance of AI-based crawlers and indexing, and what they mean for the future of technical SEO.

Why Are Crawling and Indexing Critical for SEO Visibility?

Crawling and indexing form the backbone of search engine optimisation. Together, they determine whether your content has any chance of appearing in search results. Here are three reasons they matter, and why a technical SEO audit is worth the effort:

Visibility in Search

Website visibility starts with crawling and indexing. If search engine bots do not crawl your page, it won’t be indexed, eliminating the chances of appearing in search results. No matter how well-designed or content-rich a website is, it holds no SEO value unless search engines can access, interpret, and catalogue its pages.

Timeliness of Content

Search engines regularly crawl websites to look for new content and updates. If you’re adding new products, publishing blogs, or updating services, those changes need to be discovered quickly. A delay in crawling or indexing can mean your competitors outrank you, even if your content is better.

Relevance and Ranking

Indexing doesn’t just store your pages; it analyses them. Search bots examine keywords, site structure, schema, internal links, and more to determine how relevant your content is to specific queries. The better your content is understood, the better your chances of ranking.

Top 3 Crawling and Indexing Challenges

Despite its importance, many websites struggle with crawling and indexing. These challenges can limit your ability to rank and reduce your visibility in organic search. To maintain your website’s visibility at the top of search engines, consider reaching out to professional digital marketing agencies to audit and resolve these issues.

Crawl Budget Limitations

Search engines allocate a crawl budget to each website: roughly, the number of pages their bots will crawl within a given timeframe. On large websites, or those with poor architecture, important pages may be skipped if bots spend that budget on outdated or irrelevant content. A website technical audit helps you find where the budget is being wasted.

Duplicate Content

Multiple versions of the same content, such as through URL parameters, printer-friendly formats, or HTTP/HTTPS duplicates, can confuse search engines. This can lead to indexing the incorrect version, negatively impacting your website’s SEO performance.
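As a rough illustration, the duplicate variants described above can be grouped by normalising each URL to a single form. This is a minimal sketch, not a definitive implementation: the `IGNORED_PARAMS` list, the choice to force HTTPS, and the function names are assumptions you would adapt to your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change the page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "print"}


def normalise_url(url: str) -> str:
    """Map URL variants to one form (illustrative rules, adjust per site)."""
    parts = urlsplit(url)
    # Keep only parameters that change the content, in a stable order.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # Treat "/shoes/" and "/shoes" as the same page.
    path = parts.path.rstrip("/") or "/"
    # Force HTTPS, lowercase the host, and drop the fragment.
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(query), ""))


def group_duplicates(urls):
    """Return {normalised_url: [variants]} for URLs with more than one variant."""
    groups = {}
    for url in urls:
        groups.setdefault(normalise_url(url), []).append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}


# Hypothetical crawl output used only for this example.
CRAWLED = [
    "http://example.com/shoes",
    "https://example.com/shoes?print=1",
    "https://example.com/hats",
]
DUPLICATES = group_duplicates(CRAWLED)
```

Each group that comes back is a candidate for a single canonical tag or a redirect, so search engines index one version only.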

Poor Link Structures

Inefficient internal linking, such as broken links or overly deep navigation, prevents search engine bots from discovering or reaching certain pages. This reduces crawlability and may result in valuable content being missed.
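To make "overly deep navigation" concrete, here is a small sketch that measures how many clicks each page sits from the homepage and flags pages no internal link reaches. The link map, the function names, and the `max_depth=3` threshold are illustrative assumptions, not a rule any search engine publishes.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: fewest clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_links(links, start="/", max_depth=3):
    """Return (pages deeper than max_depth, pages unreachable from start)."""
    depths = click_depths(links, start)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    unreachable = sorted(all_pages - set(depths))
    return too_deep, unreachable


# Hypothetical internal link map: page -> pages it links to.
SITE = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": ["/blog/post-3"],
    "/services": [],
    "/old-landing": ["/"],
}
TOO_DEEP, UNREACHABLE = audit_links(SITE)
```

In this example `/blog/post-3` sits four clicks from the homepage and `/old-landing` has no inbound links, so both would be worth linking from a shallower page.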

How Do AI-Based Crawlers and Indexing Improve Technical SEO Audits?

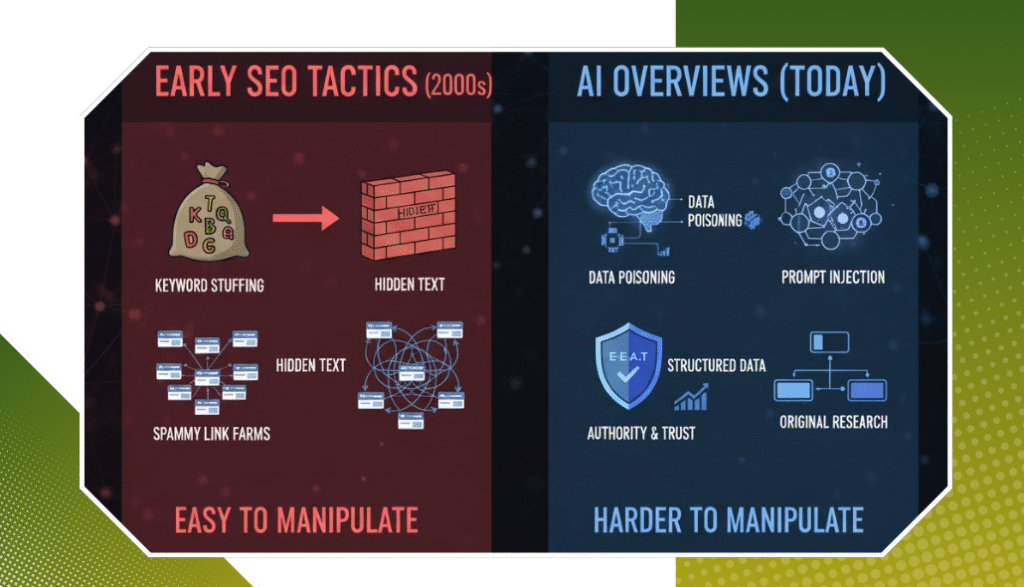

As user behaviour shifts towards AI-powered tools like ChatGPT, Perplexity, and Google’s AI Overviews, the SEO landscape is rapidly changing. Gartner predicted in 2024 that traditional search engine volume will drop by 25% by 2026 as users turn to AI chatbots and other virtual agents. This trend shows that AI is steadily transforming how people find information, and SEO along with it. Here’s how AI is redefining crawling, indexing, and the future of technical SEO audits.

Smarter Content Optimisation

AI-powered tools like Surfer SEO and Clearscope efficiently analyse content and recommend changes, improving the readability and keyword relevance. These suggestions help search engines better understand the content, thereby increasing the chances of being indexed and ranked accurately.

Identifying Gaps and Opportunities

AI tools help you analyse your website the way a search bot would, finding gaps in your pages such as poor internal linking, missing meta tags, or missing image alt attributes. Identifying these weaknesses helps improve your site’s crawlability and makes technical SEO audits smarter and more effective.
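The checks themselves are simple to picture. The sketch below uses only Python’s standard library to flag the gaps named above on a single page; it is a simplified stand-in for what commercial tools do, and the class name, issue labels, and the rule that internal links start with "/" are assumptions made for the example.

```python
from html.parser import HTMLParser


class GapAuditor(HTMLParser):
    """Collects basic on-page signals while the HTML is parsed."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_meta_description = False
        self.images_missing_alt = []
        self.internal_links = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            # Records that a <title> tag is present (not that it is well written).
            self.has_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "img" and "alt" not in attrs:
            self.images_missing_alt.append(attrs.get("src") or "(no src)")
        elif tag == "a" and (attrs.get("href") or "").startswith("/"):
            # Assumption: internal links are written as root-relative paths.
            self.internal_links += 1


def audit_html(html):
    """Return a list of human-readable issues found in one HTML page."""
    auditor = GapAuditor()
    auditor.feed(html)
    auditor.close()
    issues = []
    if not auditor.has_title:
        issues.append("missing <title>")
    if not auditor.has_meta_description:
        issues.append("missing meta description")
    if auditor.internal_links == 0:
        issues.append("no internal links")
    issues += [f"image without alt attribute: {src}" for src in auditor.images_missing_alt]
    return issues


# Hypothetical page used only for this example.
SAMPLE_PAGE = (
    "<html><head><title>Shoes</title></head><body>"
    '<img src="a.png"><img src="b.png" alt="Red shoe">'
    '<a href="/contact">Contact</a></body></html>'
)
ISSUES = audit_html(SAMPLE_PAGE)
```

Running the same function over every page in a crawl gives a quick list of fixes to prioritise before a deeper audit.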

Structuring for Featured Snippets

Want to appear in a featured snippet? AI helps you format your content in a way that aligns with search engine preferences. It guides you to use lists, tables, and Q/A formats, structures that are commonly selected for Google’s highlighted answers.

Top AI Web Crawlers and Indexing Tools

As search engines evolve, AI-powered crawlers and indexing tools are becoming essential for effective AI website optimisation. Unlike traditional bots that follow links and read code linearly, these AI tools help interpret content contextually and optimise the website for modern search. Here are some leading examples and how they contribute to smarter SEO:

- GPTBot (OpenAI): GPTBot crawls publicly available web content that may be used to train and improve OpenAI’s models.

- ClaudeBot (Anthropic): Similar to GPTBot, this crawler collects content for Anthropic’s Claude models. Well-structured, relevant, and clearly written content is easier for crawlers like these to interpret, which may improve its chances of being cited in AI-generated answers.

- Google AI Overviews: Not a crawler itself, this search feature is powered by AI models that collect and summarise information from various websites.

- AI SEO Tools: Surfer SEO, Clearscope, and MarketMuse use machine learning to analyse top-ranking content, semantic relevance, and content structure.
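Before optimising for these crawlers, it is worth confirming that your robots.txt lets them in. The sketch below uses Python’s standard `urllib.robotparser` to test a robots.txt file against the `GPTBot` and `ClaudeBot` user-agent tokens. The sample rules and URLs are made up for the example, and you should confirm the current tokens in each vendor’s documentation.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens for the crawlers discussed above (verify against vendor docs).
AI_USER_AGENTS = ["GPTBot", "ClaudeBot"]


def ai_crawler_access(robots_txt: str, url: str) -> dict:
    """Return {user_agent: allowed?} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_USER_AGENTS}


# Hypothetical robots.txt used only for this example.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /private/

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""

BLOG_ACCESS = ai_crawler_access(SAMPLE_ROBOTS, "https://example.com/blog/post")
PRIVATE_ACCESS = ai_crawler_access(SAMPLE_ROBOTS, "https://example.com/private/report")
```

With these sample rules, GPTBot may fetch the blog post but not the private report, while ClaudeBot is blocked from the whole site.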

5 Technical SEO Shifts You Need Now

With the rise of artificial intelligence, users are changing how they discover information. As a result, websites must reshape their technical SEO to match the expectations of these intelligent systems. AI-based crawlers and indexing do not just scan pages; they interpret context, assess structure, and prioritise quality signals in new ways. To stay ahead, your SEO strategy should focus on being understood by these systems.

- Build fast-loading pages with clean HTML and structured schema markup, which AI crawlers favour.

- Use llms.txt (a proposed convention, not yet a formal standard), rich schema, and consistent canonical tags to guide AI towards your important content.

- Include FAQ sections and Q&A-style pages.

- Optimise your content for visibility in AI summaries.

- Run test queries in ChatGPT or Google’s AI Overviews to see which sources get cited.
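As one example of the schema and FAQ points above, the sketch below builds schema.org `FAQPage` markup from a list of question and answer pairs. The function name and the sample question are illustrative. The resulting JSON would normally be embedded in the page inside a `<script type="application/ld+json">` tag.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps non-ASCII characters readable in the output.
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical FAQ content used only for this example.
FAQ_MARKUP = faq_jsonld(
    [
        (
            "What is crawling?",
            "Crawling is how search engine bots discover the pages on your site.",
        ),
    ]
)
```

Keep the marked-up questions and answers identical to the text visible on the page, so the structured data describes what users actually see.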

Conclusion

The digital landscape is now shifting from a search-driven to an answer-driven approach. This change is redefining the foundation of technical SEO. Nowadays, traditional crawling and indexing methods are no longer enough to keep your website competitive. From understanding content intent to identifying indexing issues in real-time, AI website optimisation is setting new standards. At Rankingeek Marketing Agency, we help business owners stay ahead of this shift by offering a combination of manual and AI-driven website technical audits. Book your appointment now!